Astronomers use measurements called magnitudes to describe the brightness of objects in the sky. When early astronomers first started to record the properties of stars, the technology to measure amounts of light precisely did not exist. As a result, observers developed the magnitude system, which compares one star’s brightness to another’s. When you say one person is twice as tall as another, no units are needed. Similarly, when we measure the magnitude of an object in the sky, we don’t attach a unit (like kilograms, meters, or seconds) to the end. The bright star Vega is commonly used as the standard comparison star, and its apparent magnitude is set to approximately zero.

Astronomers often refer to two basic types of brightness measurements:

Apparent magnitude measures how bright or faint something appears from our perspective on Earth. Bear in mind that objects can appear faint to us simply because they are far away, so apparent magnitude alone doesn’t tell you how luminous an object truly is.

Absolute magnitude quantifies how bright an object would appear if it were at a standard distance of 10 parsecs. Absolute magnitudes are particularly useful because they allow us to compare the true, intrinsic brightness of different objects. The catch is that absolute magnitudes can be difficult to determine, because they require knowing an object’s distance from the Earth.

For simplicity, we’ll rely on apparent magnitudes for most of the Voyages activities – but just remember that these measurements don’t give the true luminosity of an object, only how bright it appears from our location.

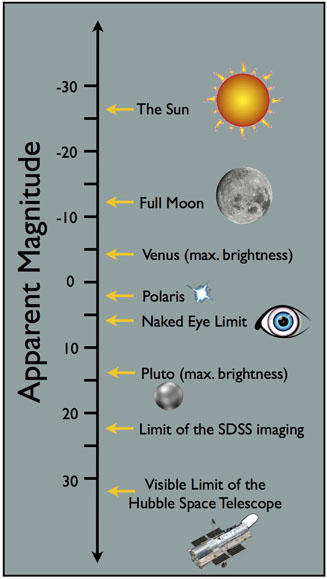

For historical reasons, objects in the sky (such as most stars) that appear fainter than Vega have positive apparent magnitudes, while objects (like the full moon) that appear brighter than Vega have negative apparent magnitudes. The full moon has an apparent magnitude of about -13, and the Sun has a whopping apparent magnitude of about -27! Venus, which you can often spot in the morning or evening sky, is quite bright, with an apparent magnitude around -5. But Pluto, which you can’t see without a telescope, has an apparent magnitude of about 14.

So the magnitude scale runs in the opposite direction from what you might expect: the larger the magnitude, the fainter the object! Think of magnitudes as finishing places in a race, where the brightest objects come in first place and dimmer objects come in 4th, 5th, or even 23rd place.
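A short Python sketch of the "race" ordering, using the approximate apparent magnitudes quoted above (the dictionary and its keys are purely illustrative): sorting in ascending order of magnitude lists the brightest object first.

```python
# Approximate apparent magnitudes quoted in the text above (illustrative values).
objects = {
    "Sun": -27,
    "Full moon": -13,
    "Venus": -5,
    "Vega": 0,
    "Pluto": 14,
}

# Smaller (more negative) magnitude means brighter, so an ascending sort
# puts the brightest object in "first place", like a race's finishing order.
ranking = sorted(objects, key=objects.get)

for place, name in enumerate(ranking, start=1):
    print(f"{place}. {name} (m = {objects[name]})")
```

The Sun finishes first and Pluto last, even though Pluto has the largest number.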

The SDSS records five different magnitudes for each object. To understand why, imagine that the SDSS telescope has five different pairs of colored glasses to put on before looking at the sky. These “glasses” are called filters, and a star or galaxy can have a different magnitude depending on which filter is used to measure its brightness. The SDSS reports a magnitude measurement in each of the five filters. If you want to know more about the SDSS filters, please see our Filters Pre-Flight training page.

For our Voyages activities, we recommend choosing one of the filter magnitudes as a measurement of object brightness and sticking with that same choice throughout.

Calculating Apparent Magnitudes

You might be wondering what the number you read off for an apparent magnitude actually tells you about the relative brightness of different objects. Magnitudes are built on a logarithmic scale, which lets us compare objects with vastly different brightnesses without using enormous numbers. On this scale, an increase of 1 in magnitude corresponds to a decrease in brightness by a factor of about 2.5. In other words, an object with a magnitude of 5 is about 2.5 times fainter than an object with a magnitude of 4.
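The "about 2.5" is a rounded value. The magnitude scale is defined so that a difference of exactly 5 magnitudes corresponds to a brightness ratio of exactly 100, which makes the one-magnitude factor the fifth root of 100. A short worked check:

$$
\frac{f(m)}{f(m+1)} = 100^{1/5} = 10^{0.4} \approx 2.512
\qquad\text{so}\qquad
\frac{f(m)}{f(m+5)} = \left(10^{0.4}\right)^{5} = 10^{2} = 100
$$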

The physical property that magnitude actually measures is flux: the amount of light that arrives on a given area on Earth in a given amount of time. Abbreviated as f, flux relates to SDSS magnitudes, m, in the following way:

$$
m = 22.5 - 2.5 \log_{10}(f)
$$

The zero-point of this scale (the reference brightness to which all others are compared) is set so that a standard source with a flux of f = 1, in the flux unit SDSS uses (the nanomaggy), has a magnitude of exactly 22.5.
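As a minimal Python sketch of this relation and its inverse, assuming fluxes are given in nanomaggies (the unit in which f = 1 gives m = 22.5). The function names are hypothetical and are not part of any SDSS software.

```python
import math

ZERO_POINT = 22.5  # magnitude of a source with f = 1 nanomaggy, per the text above


def flux_to_mag(flux_nmgy: float) -> float:
    """Convert a flux (assumed to be in nanomaggies) to a magnitude: m = 22.5 - 2.5*log10(f)."""
    if flux_nmgy <= 0:
        # The logarithm is undefined for zero or negative flux.
        raise ValueError("flux must be positive to compute a logarithmic magnitude")
    return ZERO_POINT - 2.5 * math.log10(flux_nmgy)


def mag_to_flux(mag: float) -> float:
    """Invert the relation above: f = 10 ** ((22.5 - m) / 2.5), in nanomaggies."""
    return 10 ** ((ZERO_POINT - mag) / 2.5)
```

For example, `flux_to_mag(1.0)` returns 22.5 and `flux_to_mag(100.0)` returns 17.5: a source with 100 times more flux is 5 magnitudes smaller.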

A variation of the equation above can be used to relate the difference between any two objects’ magnitudes (m1 and m2) to their flux ratio, f1/f2:

$$
m_1 - m_2 = -2.5 \log_{10}\left(\frac{f_1}{f_2}\right)
$$
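Solving this relation for the flux ratio gives f1/f2 = 10^(-(m1 - m2)/2.5). A small Python sketch of both directions (the function names are hypothetical, chosen for illustration):

```python
import math


def flux_ratio(m1: float, m2: float) -> float:
    """Return f1/f2 for two objects with magnitudes m1 and m2.

    Rearranges m1 - m2 = -2.5 * log10(f1 / f2) into f1/f2 = 10 ** (-(m1 - m2) / 2.5).
    """
    return 10 ** (-(m1 - m2) / 2.5)


def magnitude_difference(f1: float, f2: float) -> float:
    """Return m1 - m2 from two fluxes measured in the same (any) units."""
    return -2.5 * math.log10(f1 / f2)
```

`flux_ratio(4, 5)` is about 2.512, matching the "about 2.5 times" described earlier. Only the ratio of fluxes enters, so the zero-point (22.5) cancels out and the fluxes can be in any consistent unit.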

The Sun, which is 14 magnitudes brighter than the full moon, delivers almost 400,000 times as much flux (this is probably not surprising, since we can safely look at the moon but not at the Sun). So now you can appreciate how magnitudes help us compare objects in the sky that have extremely different brightnesses without having to use enormous numbers.
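The "almost 400,000" follows directly from the two-object magnitude relation, using the approximate values m = -27 for the Sun and m = -13 for the full moon:

$$
\frac{f_{\text{Sun}}}{f_{\text{moon}}} = 10^{-(m_{\text{Sun}} - m_{\text{moon}})/2.5} = 10^{-(-27 - (-13))/2.5} = 10^{14/2.5} = 10^{5.6} \approx 3.98 \times 10^{5}
$$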

Relating Apparent and Absolute Magnitudes

As we discussed already, the apparent brightness of an object depends on its distance from us. As a luminous object moves farther away, it appears less bright. Therefore, if we want to know how truly bright an object is, we need to know its distance.

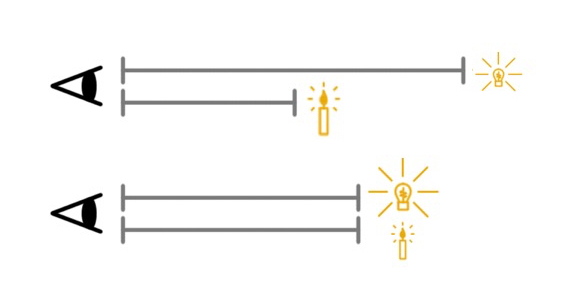

Imagine a light bulb with a greater luminosity than a candle, meaning it emits more light per second than the candle does. The light bulb would appear brighter than the candle if both were held at the same distance from an observer, but the candle and the light bulb could have the same apparent brightness if the light bulb were much farther away.
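How much farther? Assuming light spreads out evenly in all directions with nothing absorbing it along the way (the inverse-square law for a point source of luminosity L), the flux at distance d and the condition for equal apparent brightness are:

$$
f = \frac{L}{4\pi d^{2}}
\quad\Longrightarrow\quad
\frac{L_{\text{bulb}}}{4\pi d_{\text{bulb}}^{2}} = \frac{L_{\text{candle}}}{4\pi d_{\text{candle}}^{2}}
\quad\Longrightarrow\quad
\frac{d_{\text{bulb}}}{d_{\text{candle}}} = \sqrt{\frac{L_{\text{bulb}}}{L_{\text{candle}}}}
$$

As a purely hypothetical example, a bulb 100 times as luminous as the candle would look equally bright at 10 times the candle's distance.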

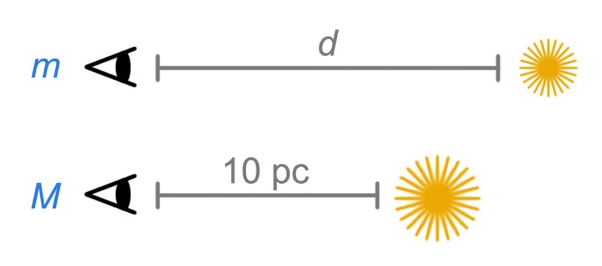

The apparent magnitude, m, measures how bright an object appears from Earth, at its actual distance.

The absolute magnitude, M, measures how bright the same object would appear if it were exactly 10 parsecs away.

Distance, apparent magnitude, and absolute magnitude are related with the “distance modulus” equation:

$$
m - M = 5 \log_{10}(d) - 5
$$

where M represents the absolute magnitude, m represents the apparent magnitude, and d is the distance to the object in parsecs. The logarithm is base 10.
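A minimal Python sketch of the distance modulus solved both ways: for M when the distance is known, and for d when both magnitudes are known. The function names are hypothetical, and distances are assumed to be in parsecs.

```python
import math


def absolute_magnitude(m: float, d_pc: float) -> float:
    """Absolute magnitude M from apparent magnitude m and distance d (parsecs).

    Rearranges m - M = 5*log10(d) - 5 into M = m - 5*log10(d) + 5.
    """
    if d_pc <= 0:
        raise ValueError("distance must be positive")
    return m - 5 * math.log10(d_pc) + 5


def distance_pc(m: float, M: float) -> float:
    """Distance in parsecs from the distance modulus: d = 10 ** ((m - M + 5) / 5)."""
    return 10 ** ((m - M + 5) / 5)
```

For example, a star with m = 10 at 1000 parsecs has M = 10 - 15 + 5 = 0: moved to 10 parsecs, it would appear about as bright as Vega does to us. At exactly 10 parsecs, m and M are equal, which is just the definition of absolute magnitude.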